In this session, Irina Cismas, Head of Marketing at Custify, was joined by Kourtney Thomas, Head of Customer Success at TakeUp, and Katherine Tattum, Founder at Insight Health Tech, to explore how CS leaders can build sustainable customer success functions inside startups.

The conversation focused on how volatile environments, fast-changing customer contexts, and missing structures force CS leaders to operate with limited data, limited tooling, and high strategic pressure. Together, they unpacked how to set priorities in the first 90 days, how to prove value without perfect data, and how to scale without overbuilding too soon.

Summary Points

- Start by Listening, Not Fixing: New CS leaders gain better context when they spend the first month building internal trust, shadowing calls, and understanding real workflows, before changing anything.

- Avoid the Early Trap: Many CS leaders confuse “launch” with “value.” Real outcomes depend on behavior change, not just onboarding or usage.

- Customer Interviews Aren’t the Goal: Staying close to customers matters, not through rigid interviews, but through direct exposure to their context, their workflow, and their friction.

- When to Use Journey Maps: A journey map brings clarity only when it’s grounded in evidence and actively changes team behavior. If not, it’s decoration.

- Data Signals that Matter: In the first 90 days, three signals matter most: activation milestone achieved, meaningful adoption across users, and healthy support interaction patterns.

- What to Do When Data is Messy: When metrics are unreliable or missing, time spent, team feedback, repeated friction points, and CSM gut instincts become early proxies.

- Budget Starts with Judgment: CS leaders earn budget when they show good judgment. Early investments in customer visits, training, and one senior hire often create more ROI than tooling.

- Scaling Signals: Time constraints, abandoned proactive work, or repeated firefighting are the first signs it’s time to hire. Don’t wait for perfect dashboards.

- Cutting Corners, the Smart Way: Skip heavy tools. Use spreadsheets. Focus on fast impact over formal perfection. The constraint is clarity, not budget.

- When Segmentation Makes Sense: Segment only when behavior, revenue, or expansion paths diverge. Don’t build it if everyone looks the same.

- Rolling Out in a Hybrid Product: For hardware-enabled SaaS, define success through customer conversations and outcomes, not just usage data.

Podcast transcript

Intro

Irina (0:00 – 6:14)

I’m Irina Cismas, Head of Marketing at Custify, and I’ll be your host for the next hour. Today we’re talking about customer success in startups, and it’s fair to say that startup CS doesn’t behave like CS in more mature companies. The environment is more volatile, signals are weaker, roles blur faster, and decisions tend to stick longer before anyone realizes they should change.

I’m not the expert in the room, but my guests certainly are. Today I’m joined by Kourtney Thomas, Head of Customer Success at TakeUp, and Katherine Tattum, Founder and Principal at Insight Health Tech. Kourtney, Katherine, welcome, and thank you for taking the time to share your experience with us.

Thank you for having us.

Absolutely, excited to be here.

Both of you have built and upgraded customer success in environments where everything was still forming: product, ownership, systems, and expectations. I think we all agree that customer success in startups is a different game. Honestly, CS strategies should be divided into two buckets: startup environments and everything else. I also want to give a bit of context for the audience joining us online.

At Custify, we work with CS teams across many stages, from early startups to more mature organizations. One pattern we keep seeing is that many CS problems aren’t caused by bad execution, but by applying the right ideas in the wrong environment or too early. A lot of what we’ve learned comes from watching teams try to create structure and program visibility before strong signals are there, and from helping them move from chaos to clarity as the company evolves. That’s why conversations like this matter: not because there’s one right way to do CS, but because context changes everything.

Today we’re going to talk about understanding the environment you’re operating in and making deliberate choices within the constraints you have.

A couple of quick housekeeping notes before we start: the session is recorded, and you’ll get access to the replay. That’s the number one question I always get. If you have questions, drop them in the Q&A tab. I’ll make sure your questions appear on screen for both Katherine and Kourtney.

We’ve saved time for a dedicated Q&A session. If we run into time constraints, no worries, we’ll tackle them offline.

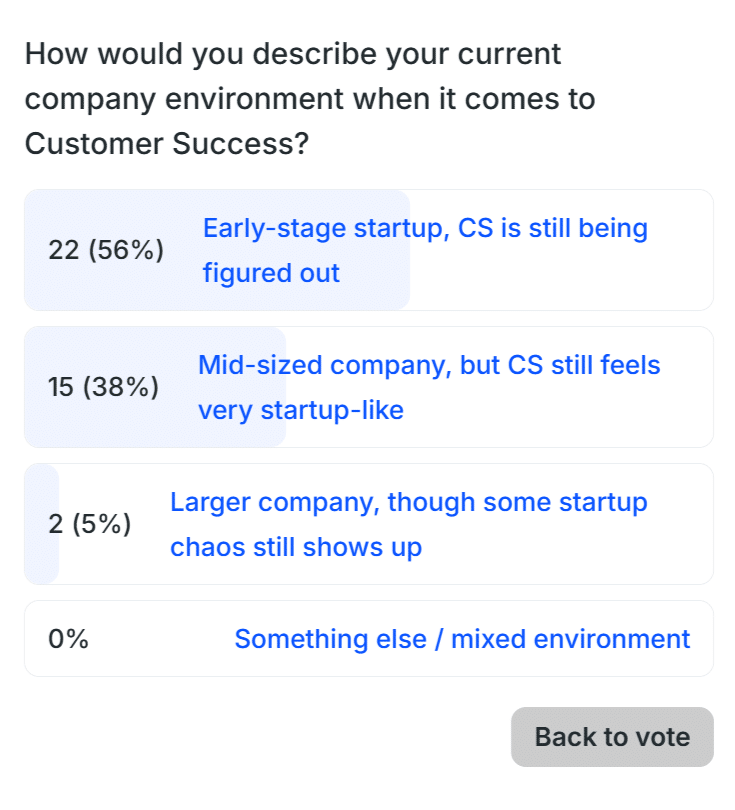

Now, to get this session started, I want to understand who’s with us online. I’ll publish a quick poll and invite you to share what kind of environment you’re operating in.

Give me one second to publish the poll. I want to understand whether you’re in a startup, a mid-sized company, or maybe part of a larger team from a corporation. It doesn’t matter either way. Did the poll appear?

We’ll give it about a minute for you to vote, but it looks like around 70% of the audience is in early-stage startups. We’ll wait just a few more seconds, but this already shows that many of you are operating in environments where CS expectations are high, while structure and clarity are still forming. That’s exactly where we’ll begin today’s discussion.

So, without further ado, Kourtney, let’s start with a scenario. Imagine you’re starting on Monday as a first-time Head of CS in a 20-person startup.

What are the first things you do in your first month? And what do you intentionally avoid? In other words, what do you focus on, and what do you leave alone, when working in CS at a startup?

What to do and not do in your first month

Kourtney (6:15 – 9:04)

Yeah, absolutely. A couple of things. The first thing I do is talk to every single person internally.

It’s a 20-person startup, you have the time and capacity to do this, and it’s well worth it. Of course, it’s about getting to know people, but also really listening: what are their pain points? What cross-functional dynamics are at play? How are people operating at different levels? How do things connect?

And since we’re in customer success, relationship building matters, a lot. That starts internally, especially in a small company.

Next, in the first 30 days, I focus on shadowing customer calls. I say “shadowing” specifically, just listening. Whether it’s CSMs, the founder, an account manager, or sales, observe how they engage with customers. It’s incredibly enlightening and helps you understand the current state without interfering.

Then, toward the end of the first month, I recommend doing the job yourself for a week. Sit with someone who’s handling customers, understand their daily tasks, what tools they use, how they spend their time, then step into the role yourself, to the extent you can, without disrupting the customer experience.

Getting your hands dirty changes your perspective. It’s not about assuming, “This is probably what’s happening.” It’s about seeing it for yourself. And in remote environments, that’s even more important: you don’t know until you do it.

So those are my top three actions for the first month.

What I intentionally don’t do? I don’t touch any systems or processes. Even if I see obvious opportunities, I hold off. You need to evaluate first. Just because something looks fixable doesn’t mean you should jump on it immediately. Waiting a little longer can actually lead to much better outcomes.

The most common early mistake

Irina (9:04 – 9:48)

Okay. I think not touching is the hard part, and it’s where people get a bit uncomfortable, because there’s a lot of pressure to prove yourself. And the moment you go into proving mode is the moment you’re no longer taking the time to absorb. I really like that not-touching part.

Katherine, what is something early CS leaders do that feels right at the time but causes problems later?

Katherine (9:49 – 11:02)

Great question. So the thing probably is that they think they’ve won when the sale is made, right? They think that’s the win.

They confuse that contract, if you will, with the change management that needs to happen, the behavior change that needs to happen. So we’re all guilty of thinking our products are great and thinking our customers are obviously going to think our products are great too. And so focusing more on who the users are, why they have bought your product, what they’re trying to get out of it and what they’re going to need to change to actually start using that product is really important.

So if you don’t invest in that piece of it, most likely they’re not going to make the uncomfortable behavior change that they’re going to need to make. I think I’ve had one product in my entire career where the users were actively excited about taking it on. Most of the time they’re trying to do their job and your product is something they have to use along the way, whether they like it or not.

And so the more you can meet them where they are, understand their objectives and help them change their behavior over those first few months, the more likely you are to succeed in the long term.

Customer interviews vs. staying close

Irina (11:03 – 11:52)

I think that connects well with one of the things I often see recommended in groups, like on LinkedIn: do customer interviews. I’m not sure if this is what you’re referring to, but I’m curious: did you run customer interviews, and how many did you actually do? How do you choose whom to speak with? And do you do those customer interviews regardless of the model, B2B or B2C?

What’s the approach? Because it ties in with what you’re doing.

Katherine (11:52 – 12:31)

So I wasn’t really talking about customer interviews. I was focusing on the customer’s journey rather than my own. But you’re right.

If I were focusing on my own journey, to add on to what Kourtney was talking about before, I was sitting, listening to her and thinking, yes, absolutely, she went through it.

Throughout their interaction with your company, you want to be talking to customers regularly, for whatever reason. And if you have no good reason to be talking to them, then an interview is the right thing. But when I was speaking, I was specifically thinking about how to make sure those customers are successful.

Irina (12:32 – 13:00)

We’ll discuss the user journey, because it’s the second thing that I often see recommended. But staying on the topic of customer interviews, Kourtney, do you prioritize them in the early days? Or is it a must-do in a startup environment, to be able to stay close and react fast?

Kourtney (13:02 – 16:01)

So I’ll say to that end, I don’t believe anything is a must-do. Everything, to your point, is context-driven depending on your customer, your company, your stage, and exactly where you’re at. The real must is having good discernment and judgment to understand what’s necessary for you.

The way I think about it is less about having to do customer interviews and more about finding ways to stay close to the customer as needed. As a leader, especially as you start to establish maturity, it can be easy to become more distant from the customers. Making a conscious effort matters, whether it’s talking to them early on during a shadow call or checking in later because you haven’t connected in a while.

You might say, “Hey, I haven’t seen you in a while. I wanted to hop on this call. No agenda.” And maybe something comes up organically.

You can structure it however you need to. At TakeUp, when I joined, I had great intentions to run a set of customer interviews across different parts of the base. My situation didn’t work out that way.

I ended up losing someone within two weeks and had to jump into the role. It was a whole thing.

But I spent time with about two-thirds of our customer base in a real way, very early on, within the first 60 days. It was a mix of regular cadence calls, escalation calls, and different conversations with a good cross-section of customers. Through that, I got to the same place I would’ve reached with more formal customer interviews.

I listened for patterns, what they brought up organically in cadence calls, escalations, onboarding, positive or negative about the experience or the product. I also listened for questions and dug into where they were coming from.

What were the gaps in experience, expectations, or value throughout the lifecycle? That helped me see where to take action. Or, what could we double down on that they were clearly telling us during these calls?

All of that to say, formal customer interviews can be fantastic. And if you have the capacity to do them, do them. But staying connected to the customer is the real key.

Katherine (16:02 – 17:25)

If I may, something I’d add to that, and it’s perhaps a little specific to the industry I’m in. I’m in health technology, and one of the challenges for technology companies working in healthcare is that most of their staff are young people.

Your average 20-, 30-, or 35-year-old hasn’t been to a hospital, never been to a retirement home, hasn’t really been seriously sick, and has never been in an operating room. So one of the things many companies I’ve worked with have done is bring their staff into the customer setting. It’s not an interview, but it exposes the staff to really understand the customer’s experience, not just the tool, but what their day is like.

The strongest experience I had, for example, was going to a skilled nursing facility where the patients are old and infirm. It’s a completely different environment than any other healthcare setting I’ve been in. I’m sure the same applies in retail, hospitality, and other industries where the staff’s experience doesn’t reflect the customer’s reality, whether they’re in CS, product, development, or any other role. It brings a real appreciation for the experience of your customers’ lives and how unimportant your tool often is to them.

Journey maps: useful or false progress?

Irina (17:28 – 17:45)

Is it fair to summarize that this is less about collecting input and more about deciding what to trust, understanding, and basically forming the foundation for what you’re going to build at some point?

Katherine (17:46 – 18:22)

Exactly. Exactly. Yeah.

But it’s also, to Kourtney’s point, understanding their context, understanding where they’re coming from, and understanding that what seems reasonable to you when you’re sitting at your nice quiet desk with a comfortable chair, this, that, and the other, and a nice big screen on your desktop, is completely different from their lived experience of standing at a counter with a tiny screen and a customer yelling at them. Like, those worlds are entirely different.

So you can only really appreciate that when you see it for yourself.

Irina (18:24 – 18:54)

I want to pick up on what you said about building the journeys. And since you mentioned it, Katherine, I want to ask you, when does building a user journey map bring clarity? And when is it more confusing?

Speaking about context and putting everything in context, how do you decide whether it would help you or not?

Katherine (18:56 – 20:12)

Journey maps, I see them primarily as a communication tool or a decision tool. They’re especially useful when you have real customer evidence and can map the top two or three moments driving adoption. You can assign an owner for each phase or stage of adoption, clarify how value is delivered, and what value is expected through those moments. More importantly, you get alignment across the team.

Journey maps help you spot patterns and gaps, and they clarify roles or misunderstandings. They become false progress when they’re aspirational. If you don’t have many customers yet, your journey won’t be consistent.

It’s not worth investing a lot of time in it. It also becomes false progress when people start arguing over colors or shapes; you end up focusing on details instead of the big picture. The journey should give you that big picture.

But the most important part is that it leads to change. If the map doesn’t change what someone does next week, then it’s not a map. It’s a morale activity, which has its place, but isn’t really valuable.

Irina (20:14 – 20:23)

Kourtney, I’m curious, is the user journey something that’s industry-agnostic? Do you also consider doing it in hospitality?

Kourtney (20:25 – 21:31)

Yeah, so first of all, I was actively nodding along with Katherine’s assessment on journey mapping. I think it’s definitely relevant, those clear points of adoption, value, and decision-making are huge. It’s also something that took me about six months to rebuild and envision what our journey map should look like, and then communicate that to the team.

It was interesting because I came in and they already had a map. I spent three or four months really close to it and thought, no, this isn’t it at all.

It was very aspirational and not realistic to the customers or to what we were actually doing and what they were experiencing. So yes, it can be a great tool, but it has to be functional and realistic to your business and your customer.

Irina (21:32 – 22:13)

Okay. And what I understand from what you said is that it’s a process that takes time. First of all, it’s not something that you build alone; I assume it takes alignment with other functions in the organization.

And you don’t build it in one week. So you don’t come in, build it, and then, okay, it’s set in stone. I feel like it’s a living thing. It’s a living process.

And you need to revisit it and see if it’s still relevant. So, yeah, go on, Katherine.

Katherine (22:13 – 22:39)

No, I was just saying, absolutely. I think you’re right. And that revisit can be just periodic.

We haven’t looked at this in six months, 12 months, so let’s see what has changed. And in startups especially, where your customer base might be evolving, your product and its offerings might be evolving, and your capabilities are evolving, that’s when it becomes really important to come back to it somewhat periodically, just to see where things stand.

Data that actually matters

Irina (22:42 – 23:05)

We tackled the customer interviews that are often recommended, and we discussed the user journey. Now I want to discuss the data that is actually worth tracking and what metrics tend to create false confidence in a startup environment. Katherine, do you want to take this one?

Katherine (23:05 – 25:32)

Yeah, sure. Obviously, every product is different, so you have to think about this generally. There are three things that really matter in those first crucial 90 days.

I always think of that window as the point by which customers have usually set their pattern, whether they’re on track to renew or not. The first thing is whether they’ve achieved some kind of activation milestone. This varies the most from one product to the next, and even from one customer to another.

There may be different milestones for different roles within the same company. Some users will have specific things they’re trying to achieve, and how they get there varies. Are they activated on the product? Are they starting to adopt it?

The second thing is the breadth and depth of adoption. A lot of startups have fairly simple products, so it may not be a complex question. But if you have different user types and different functionalities for those user types, then it’s not just about whether one or a few core users are happy. Is everyone really happy? And how broadly has the product been adopted across the user population? Or is it just one champion who’s doing really well and giving you positive feedback?

The third is a kind of friction signal, and there’s a happy medium. If you’ve had no support calls, you have a problem. I don’t know any startup product that’s so good no one ever needs help. That’s one end of the spectrum. On the other end, if people are struggling too much, that’s also a problem.

You won’t know what the right number is per user or per customer until you go through some of those cycles. But you need to have some kind of volume expectation from each site to assess whether they’re on track.

As for metrics that create false confidence, things like number of logins or daily active users, those are metrics product managers like because they indicate usage. But for real behavior change, the fact that someone logs in doesn’t tell you much. What matters is whether they’re doing the thing that delivers value from your system. That’s what actually matters.

Irina (25:34 – 25:41)

And what about when you don’t have clean data, but you still have to make decisions?

Katherine (25:42 – 25:47)

That’s the hardest part. Also, I’ll let Kourtney answer that.

Kourtney (25:50 – 28:06)

I’m grateful to have valuable insights there. For me, early on, time and manual work have been a good guide. Scale is the goal, of course, so you’re always keeping big-picture strategy in mind, but also looking for low-hanging fruit, what trends you’re seeing that you can act on to move things in the right direction.

Signals, for me, often come from where my CSMs are spending a lot of time. Even without a lot of quantifiable data, we just know when something is taking too much time. What tickets and bugs keep coming up? What are the patterns? What are those numbers? What kind of customer profiles show up across those patterns? Are there segments or personas that keep hitting the same issues?

Those have helped me figure out next steps in many cases. Even when the data isn’t clean or easy to pull into a spreadsheet from the CRM, you can still see where it’s coming from. I look for repeated questions, not just the loudest complaints, but the areas where the CS team is doing heroics, constantly firefighting, where their energy gets drained disproportionately.

I don’t wait for full confidence or perfectly aggregated data. You can’t always get that. I look for alignment between my gut, my team’s experience, and what customers keep bumping into. That starts to guide decision-making.

Building trust without dashboards

Irina (28:07 – 28:36)

Kourtney, I want to go back to what you said. We have a question in the chat from Fred: what’s a good way to understand the outcome value? I feel like it’s related to this topic, to metrics, data, and measuring.

So he asked, what’s a good way to understand that outcome value?

Kourtney (28:39 – 28:47)

I guess I’m not fully connecting with that. Katherine, do you have any insights there?

Irina (28:48 – 29:25)

If Fred is typing, we’ll let him offer more context. I want to stay on the data part and connect it with what you said about the things you shouldn’t do in the first month. How much time do you spend on data, and on trying to connect the data points, not necessarily in the first month, but at the very beginning?

How do you calibrate and balance this part?

Kourtney (29:27 – 31:48)

So when I think about it, in the beginning, for me, again, we had not a lot of data in general, and then what we did have was all over the place. So I spent some time high-level thinking, like, okay, these are the very basic things that I am going to need. I do need better churn tracking, and trends, and again, personas, and all of that, none of that was happening.

There was just no CRM hygiene, nothing like that. I connected with our product designer around some, like, let’s align. I was able to get a ballpark of, here are some good users, here are some poor users. Let’s connect that to any trends in user engagement behavior. So it was very high-level to start.

I’ll say I’m nine months in here, and I’m finally, this week, this month, doing a lot more deep dive analysis because the data is finally in a place where I can say, okay, now we can really look at more granular insights that connect to the big-picture strategy. Here are the glaring things that we know we want to work on across teams or different areas.

In the beginning, I feel like, for me, there wasn’t a lot of data that I was looking at. It was more about understanding what I need, how to set that up quickly, kind of quick and dirty if I can, and then continuing to build that out. It was some internal behavior change too, to be honest, and change management within my own team. Like, hey, guys, we have to operate in this way.

I know it’s a pain in the butt, but you have to spend an hour a week on your CRM hygiene. Sorry, this is non-negotiable. Then across the product team and other areas too. Now we’re getting to a place where it’s set up a little better.

We got somebody in ops who’s doing more BI for us, dashboards and things like that. But in the beginning, it was about understanding we don’t have anything, so what do we need? How do we do that fast?

Then we can improve upon it as we go. And now it’s in a better place. It’s a little nerdy exciting to be able to see more there.

Startup metrics that matter

Irina (31:49 – 32:34)

I really like that you basically painted the reality that we often see. And now I’m curious, how did you set expectations with your boss? I know that C-level people, and not only them, are super keen to work with numbers, but you don’t have them, and you need time to build that.

And as you mentioned, it took you nine months. How did you talk to your boss about not having the numbers? And for nine months, how did you buy yourself some time to be able to build that part?

Kourtney (32:35 – 34:03)

I mean, I’m pretty direct in setting those expectations. And again, luckily, I know it was the theoretical scenario, but I work for a 20-person company, so we’re all very close.

The leadership team, we’re all here together. So I was able to have a conversation, like, none of this exists. I’m working on it.

But just understand, I’m going to use what I have, but it’s going to take some building. And he also came in and was like, ooh, we don’t have a lot.

So I think, of course, that’s part of why I was brought in. He knew where we were at, which I’m definitely kind of lucky for in that sense. But also, providing what I could with what I had all along the way was the way, to your point, to buy some time. Like, this is incomplete data.

Just giving that summary, this is incomplete data, here’s what we have, here’s what we don’t have. But also, here is the signal, the early signal that we’re seeing. So let’s talk about that.

Let’s use that. And we’re continuing to ingest and collect data that will make the signal richer along the way. And then, again, as we got in more operations and stuff like that, very early on I was communicating with her, like, hey, this is what I need you to set up, this is what we’re going to need.

And just staying close to her along the way has fast-tracked that.

Irina (34:04 – 34:50)

Speaking of metrics, Carly asked which metrics you think are the most crucial to track. Obviously it differs based on the product, but what’s your take?

And I wanted to ask you, can we give some concrete examples of metrics, from Kourtney’s side and from Katherine’s side? It’s good that you serve two different industries and two different types of products, so Carly can have two different views on what’s important in the setup and the environment you’re each operating in.

Kourtney (34:52 – 34:54)

Katherine, do you want to jump in? I’ve been talking a lot.

Katherine (34:55 – 36:10)

So it is very specific to the products. Absolutely. And different for the users.

I’ll give an example. I was recently working with a company that did a scribe specifically for the medical community. The patterns we were seeing were very different depending on the type of clinician.

If it was a doctor, like a family doctor or general practitioner, they have short appointments, 20 to 40 appointments a day, five to fifteen minutes long. Tick, tick, tick, tick, tick. It’s a very specific pattern compared to a therapist, for example, who will have five or six appointments a day, each an hour long. It’s a completely different engagement pattern.

So you really need to start learning what your user base is like. And when you’re starting out, you don’t necessarily have that data. One tactic I’ve found very useful is to have the CS team, the people who are speaking to customers daily, provide qualitative feedback and information when we don’t have quantitative data.

But whatever quantitative feedback you can get, you obviously want to include.

Irina (36:13 – 36:24)

I’m going to stay on you, Katherine, because you mentioned quantitative and qualitative metrics. And how do you measure if they’re actually achieving the outcome they bought the product for?

Katherine (36:25 – 37:43)

That’s hard. It can be very difficult because sometimes the outcome is qualitative rather than clear-cut. The danger is that in SaaS, you’re focusing on a feature of your product, but that’s not actually what’s delivering value.

You have to find a middle ground. Is it that they get to use a particular feature? Is it a chain of events, a path through your application, that indicates success? Is it that they’re using a particular feature regularly enough? These can be very difficult to identify and difficult to track.

So, just to Kourtney’s point, you need to do the best you can.

In terms of building out that instrumentation, the most effective way I’ve found to think about it is tying it to revenue. What gives us insight into retaining revenue and knowing where we’re likely to expand?

That not only gives you the metrics you need to succeed in your role, but also gives you the argument you’ll need to convince the executive team to invest in the instrumentation you require.

The budgeting reality in startups

Irina (37:44 – 39:33)

If I may, Katherine, I want to build on what you said. From my point of view, it’s very hard to come up with a definition of what a successful user looks like. In previous organizations where I used to work, more product-led ones, it was a joint effort between marketing and CS; every function needed to have a shared definition of what success means.

It took us three or four months. We started speaking about it and eventually came up with a definition of how we measure an onboarded user, what onboarding means in our case. And we tweaked it because it wasn’t perfect.

We adjusted it based on the user journey, the constraints we had in terms of tracking, and because we wanted to build an ideal onboarding definition, but we didn’t have the full leverage. So we scraped things together and iterated on it.

It’s hard to define from the very beginning. And it’s not a one-team effort. If CS has one definition of success and product has another, that’s not ideal.

Alignment is the hardest part. Even in small teams. In bigger teams, alignment is even harder to reach.

Katherine (39:35 – 40:15)

Absolutely. Alignment is so important: you all need to be chasing the same definition of success. If you don’t have that, you’re going to be pulling each other in the wrong directions.

Especially as your company matures, each team will have sub-objectives. The product team may be looking to see what else users do or exploring different areas for growth. But you still need that one North Star that everyone agrees on as the definition of success across the board.

Irina (40:16 – 40:52)

I want to make things a bit uncomfortable for you both and talk about money, people, and asking for more, usually the things we’re afraid to tackle. It ties in with data, because when you have the data and reports, it brings the confidence and leverage to ask for budget.

Kourtney, I want to ask you, as Head of CS in a startup, how do you build your first CS budget? And what are the first things actually worth investing in?

Kourtney (40:54 – 44:33)

Yeah. So for me, I started early on with the capacity model, which comes from that first 30 days of spending time with everybody, doing the job, and really getting a sense of where things are. I run it as: here’s where it is today, here’s where I know I can improve.

That’s a good starting point for buy-in and leverage with my CEO and our finance team. From there, I align that with the strategy for the next quarter and our overall yearly strategy.

What are the high-level company objectives? How do the CS objectives ladder up to that? What are we expected to achieve, and what needs to happen on the ground to make it possible?

It’s about backing into it. What’s going to help us be more consistent with delivery and customer outcomes? If we need to upskill the team on becoming more commercial, then we need budget for training. If we’re focused on capacity and scaling CSM time, then we need budget for AI or tooling that supports that.

Early things worth investing in will vary by company and leader, but I think team culture and training are worthwhile if you can gain buy-in. Katherine talked about customer visits. That’s at the top of my list. Inserting yourself into the customer’s environment will bring results that go far beyond what you expect.

You also need a process so the budget makes sense. You don’t want to randomly spend a large amount on one customer visit. Budgeting means selecting tools that actually move the needle. You don’t need every tool, especially if you don’t have many customers yet. That would be too big of an investment. You need to be realistic about your stage and what you really need.

This part might be somewhat controversial, but I believe early investment in headcount matters. If you can bring in someone senior, it can be a very good investment. Someone you can depend on, someone who helps things move faster and supports a strong foundation.

Then you can optimize in other ways. But overall, and we’ve touched on this already, the biggest thing I’ve learned with budgeting is that it’s about proving leverage and showing your judgment as a leader. You’re not asking for everything. You know exactly why you’re asking and what the return will be for the team and the company.

When you show that judgment clearly, connecting investment to outcomes, you’re far more likely to get the budget you ask for.

Katherine (44:36 – 44:49)

Yeah, it is the ROI. She’s absolutely right. It’s the ROI.

That’s the important part of that conversation. Yes, this is going to require this much upfront investment. However, it’s going to pay off for us in this timeframe, in this way.
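The ROI framing described here (an upfront investment that pays off in a stated timeframe) can be sketched as simple payback arithmetic. The snippet below is a minimal illustration and was not shown in the session. The `Investment` class, the function names, and every figure are hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class Investment:
    """A hypothetical budget request; every figure is illustrative only."""
    name: str
    upfront_cost: float     # one-time spend, e.g. a customer visit or training
    monthly_benefit: float  # estimated monthly value (retained revenue, time saved) in dollars


def payback_months(inv: Investment) -> float:
    """Months until the cumulative benefit covers the upfront cost."""
    if inv.monthly_benefit <= 0:
        return float("inf")  # never pays back under these assumptions
    return inv.upfront_cost / inv.monthly_benefit


def roi_over(inv: Investment, months: int) -> float:
    """Simple ROI over a horizon: (total benefit - cost) / cost. Assumes cost > 0."""
    total_benefit = inv.monthly_benefit * months
    return (total_benefit - inv.upfront_cost) / inv.upfront_cost


# Assumed numbers: a $3,000 visit expected to protect $1,000 of revenue per month.
visit = Investment("customer visit", upfront_cost=3000.0, monthly_benefit=1000.0)
print(payback_months(visit))  # 3.0
print(roi_over(visit, 12))    # 3.0
```

Stating the monthly benefit as an explicit estimate keeps the request honest: the executive team can challenge the assumption rather than the arithmetic.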

When it’s time to hire

Irina (44:51 – 47:00)

Kourtney, I really like that you mentioned the importance of getting the foundation right. I remember something someone once told me. When you’re at zero, operate like you’re at one million. When you’re at one million, build like you’re at ten million. And I think that often we end up cutting corners and doing scrappy work, even with hiring or decisions that aren’t ideal, because we’re constrained.

We will talk later about how to cheat a little and still make things work. But I second what you said. It’s important to have senior people with experience from the beginning because they help build the right foundation, and then you can expand.

In startups, having senior people also matters because there’s no time. You need to move fast. Juniors or mid-level team members don’t have the time to learn and grow. They are thrown straight into the work. And honestly, that’s not fair.

So yes, I believe it’s crucial, especially in startups, to bring in experienced people who have done it before. It saves time.

And speaking of scaling, let’s shift from budget and money to the people side. Let’s talk about how you know when it’s time to hire. What’s the signal that working hard is no longer enough?

Katherine (47:00 – 49:03)

Yeah, well, there are a couple of things I look for, and I think it really comes down to revenue defense. How do you make sure you retain the customers you have and then build from that foundation?

The first is when people are consistently dropping core activities that protect retention: proactive risk checks, proper onboarding follow-through, taking time to understand how a customer organization is performing, and preparing for renewals early. These activities are critical. They take time and often don’t involve direct conversations with customers, so your team needs space in their day to handle them.

The second is when support and implementation load forces you to operate in a constant reactive state. That cuts into time for everything else.

You also need to have, as Kourtney mentioned, a sense of what your team can handle. How many customers, how many users. Even if you don’t have perfect data, you can build assumptions, clearly state them, and then test them over time.

For example, you can say: this is the number of users we’ve supported with our current team. Now we’re at a higher number, and it’s no longer manageable. It’s time to add someone or make a change.

One red flag is when everyone just says “I’m busy” without a clear understanding of where their time is actually going. You need to invest some effort in understanding how time is being spent before hiring blindly.
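The idea of building capacity assumptions, stating them clearly, and testing them over time can be sketched in a few lines. This is only an illustration under assumed numbers, not a model presented in the session. The `CapacityAssumptions` fields, the function names, and all values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CapacityAssumptions:
    """Explicitly stated assumptions, meant to be revisited as real data arrives."""
    csm_count: int
    hours_per_csm_per_week: float       # total working hours per CSM
    proactive_share: float              # fraction of time protected for proactive work
    hours_per_customer_per_week: float  # reactive support and implementation load


def max_customers(a: CapacityAssumptions) -> int:
    """Customers the team can carry without eating into proactive time."""
    reactive_hours = a.csm_count * a.hours_per_csm_per_week * (1 - a.proactive_share)
    return int(reactive_hours // a.hours_per_customer_per_week)


def hiring_signal(a: CapacityAssumptions, current_customers: int) -> bool:
    """True when the current load exceeds what the stated assumptions allow."""
    return current_customers > max_customers(a)


# Assumed: 2 CSMs, 40-hour weeks, 25% reserved for proactive work,
# and half an hour of reactive work per customer per week.
team = CapacityAssumptions(2, 40.0, 0.25, 0.5)
print(max_customers(team))       # 120
print(hiring_signal(team, 100))  # False
print(hiring_signal(team, 130))  # True
```

Because the assumptions are written down, they can be corrected once the team tracks where time actually goes, which is the check suggested before hiring.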

How to cut corners without compromise

Irina (49:05 – 49:35)

Let’s teach the audience how to cheat a little. Where do you consciously cut corners? How exactly do you do it?

And most important, why does it work in that particular context? Because it’s not only about the tactic. I want to make your thinking visible for the others, as you mentioned earlier, so they understand how this applies to them.

So let’s give a few examples of when and how you can cut corners consciously.

Katherine (49:36 – 51:06)

Yeah, so I was thinking about this, and one of the easiest ways to answer that question is to start with what is absolutely non-negotiable. That varies by industry. For example, in health technology, privacy and data protection are critical and cannot be compromised.

That gives you clear constraints and helpful guardrails. Knowing where you cannot cut corners is a great place to start.

But in startups, the first place you look to cut corners is tools. I’ve seen great systems designed to help account managers plan accounts and track performance. I love the idea of them, but I’ve never actually been able to use one because they’re too expensive.

When you’re small and have a limited customer base, there’s a great alternative called Google Sheets or Microsoft Excel, and we live in them. Especially in early stages, everything changes every few months: your systems, your approach, your processes, how you deal with customers, even your product and team. Investing in a heavy SaaS tool doesn’t make sense at that point. You’ll struggle to adapt. But having everything in a spreadsheet is flexible and practical.

That’s an easy place to cut corners early on. Kourtney, what would you add to the list?

Kourtney (51:07 – 53:55)

Yeah, I wholeheartedly agree with that last one. It’s really easy in our industry to say, we need a CSP immediately. But you don’t. If you’ve got 100 customers, put it in a Google Sheet. It’s fine.

I love that you said that, because sometimes I feel like the only one saying it. So we’re not alone. And for anybody on this call who is using a lot of Google Sheets, you’re right where you need to be.

I would just plus one that. Use what you have. That’s a big cheat code for me. There are many ways to make things work. You can even ask your preferred LLM: “Here’s what I have, here’s what I’m trying to achieve. Help me figure out how to do it with what I have, and tell me what the next step could be.” You can go surprisingly far with your current tools before anything breaks.

Irina, you mentioned that if you’re at one million, you’re thinking about three to five million. You’re already thinking about the next step. So do what you can with what you have. That will work for quite a while.

A couple of things also come to mind. You know what’s best for your org. Don’t fall into the trap of thinking you have to do things a certain way. You’ll know what’s non-negotiable for your business. But skip the formal structures and templates that are designed for enterprise-level companies. That’s how you move fast and with velocity. Know exactly where you’re trying to go, and then move toward it.

You don’t want to just move fast and break things, but you also don’t need to insert complexity where it’s not needed.

Another thing for me is: what do we stop doing? That start-stop-continue framework is real. I just shared this in our January board meeting for our 2025 evaluation: what did we stop doing to scale?

Some things that were in place when you joined don’t make any sense anymore. Just because it’s how we’ve been doing it doesn’t mean we have to keep doing it. Setting those things aside can help free up time and energy for better work.

When segmentation makes sense

Irina (53:59 – 54:33)

I want to take the question that we have from Fred. And then I’m going to ask you one more question because we only have six more minutes. Fred, yeah, time passes.

Fred asks: are you segmenting your customers, and on which dimensions and parameters did you segment? Do you both want to take this and explain how it applies to your product? Let’s see if it’s different based on industry or type of customer.

Katherine (54:33 – 55:13)

Yeah, I think we’ll both take it because we’ll both have very different perspectives. One thing that almost always comes up is revenue and revenue potential. How much the revenue potential matters will depend on how much expansion is in scope for the CS role, because companies organize themselves a little bit differently.

So that’s the biggest thing. But for a lot of the companies I’ve worked for, because the workflows differ across clinical environments, the type of organization matters too. Kourtney, what about you?

What have you seen?

Kourtney (55:13 – 56:59)

For context, my company is solely focused on SMBs. Our parent company builds AI-driven solutions for small and medium businesses. That’s the mandate: every company in the portfolio is built around that.

Even within that, there are distinctions: micro SMB, standard SMB, and slightly larger businesses. So in a way, we do see segmentation by size and stage.

That said, we haven’t segmented yet. It just didn’t make sense. Nearly all our customers fall into the same bucket revenue-wise. There’s not much expansion potential, because our product is a point solution. We don’t have a platform play or a big upsell opportunity, and many customers already come in at our higher service tier, so there’s no real revenue-based segmentation possible.

We’re just now shifting ICPs, and it’s on my list for February to begin segmenting. But I need to define the dimensions and parameters very clearly before we start. I can see where it’s going, but we’re not there yet. Everyone’s handling the same customer type, so segmentation hasn’t been a necessity.

As Katherine said, it really depends on the product and what the customer base looks like.

Rolling out complex products

Irina (57:00 – 58:09)

We have another question from Rachel, and I’m going to delete my question because we don’t have time to tackle it.

Rachel says: I’m a CS coordinator who is learning CS while also helping to build our CS function. We’re about to roll out our platform, which is hardware-enabled SaaS.

That’s a hard one. What should I be trying to put in place now? When do I raise my hand for outside help, like a consultant or expert?

So it’s not a native SaaS, and that’s tricky. It’s a hardware-enabled SaaS. I can tell you, Rachel, I’m thinking of building my next webinar around non-SaaS-native products, because that’s a different challenge.

Let’s see. But with the information we have so far, she’s trying to build a CS function while learning and also dealing with a complex setup.

Katherine (58:11 – 59:25)

Yeah. So I think the first thing that comes to mind is, especially because you’re just starting with the product, probably no one even knows what success looks like yet. You have to start making some guesses about what success will look like for your customers.

The best way to do that is by talking to them. As you get those early customers, engage with them to understand what has to happen for them to feel successful, so that you know what to look for early on. But to start with, oh my goodness, it’s just rolling out the platform.

It’s definitely an exciting time. The place to start is figuring out how to get people going. What are they going to do when they first sign up, in the first minute, first hour, first day, first week?

Just get that going. And you’ll be wrong. Just to be clear, you’ll be wrong.

But it’s a place to start. Then you talk to customers, see what happens, course correct, and off you go.

What would you add to that, Kourtney?

Kourtney (59:26 – 1:00:28)

I would add to the second part of that question, when to raise your hand for outside help, that you should do it early, as long as you put together a clear pitch on the ROI of that investment. For example: if we spend X dollars for a limited engagement with an expert, here’s how much further it gets us.

If you know you don’t have the expertise in a particular area and this person does, that’s a strong case. It will accelerate your rollout and have a measurable impact, whether that’s on onboarding speed, customer retention, or near-term revenue.

If you’re already feeling the need, it’s probably time to start shaping the proposal. Startups always run on speed. If it helps you move faster and more effectively, it’s worth it.

Irina (1:00:29 – 1:01:25)

Kourtney and Katherine, thank you so much for sharing what actually works and what doesn’t when building CS in a startup environment. I appreciate you taking the time to be here and share your experience so openly.

Thanks to everyone who joined live, asked questions, and contributed to the conversation. I especially love when people are chatting behind our backs and offering advice. Thank you for doing that.

We’ll make sure you get the recording soon. I’ll be back with my next webinar in the first weeks of March, continuing this series of practical conversations around customer success.

Until then, take care, and thanks again for spending the hour with us.

Thank you.